Claude 3.7 Sonnet vs ChatGPT 4o: A Hands-On Comparison for AWS Graviton Calculator Development

One prompt, three platforms: who delivered the best code?

Part 1: Why This Tool?

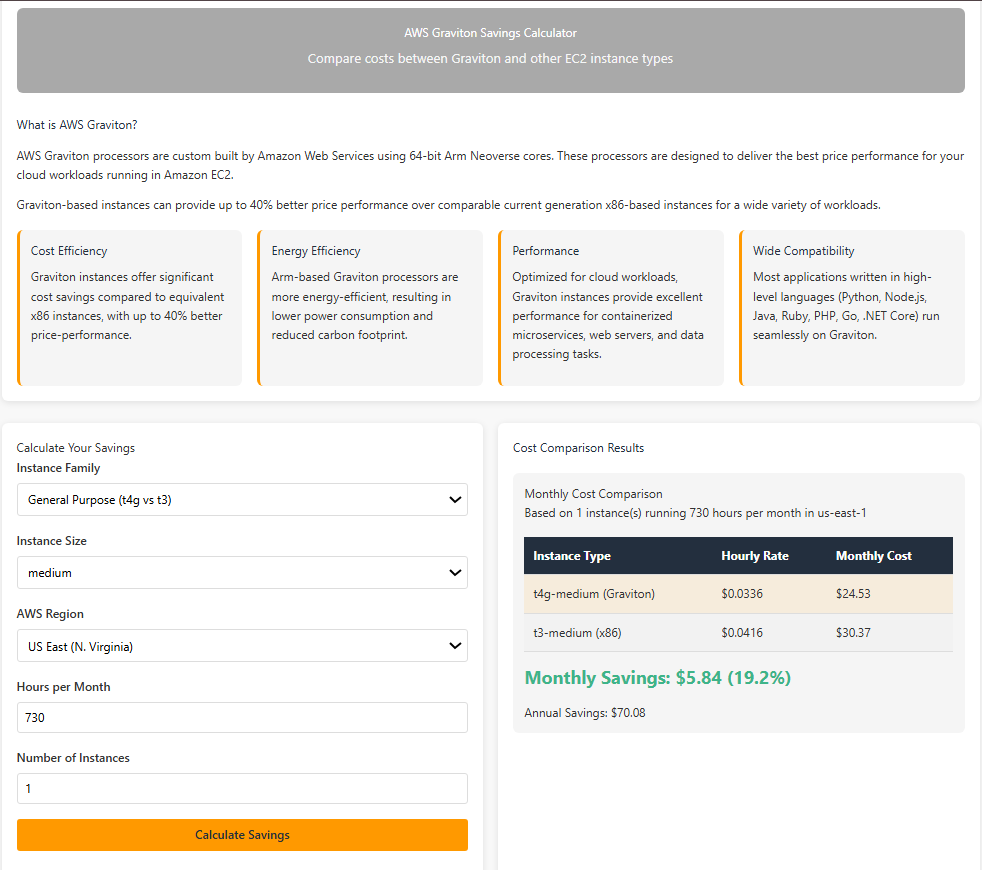

In one of my earlier articles, I argued that AWS Graviton should be your first choice for compute, mainly because AWS cites up to 40% better price-performance than comparable x86-based EC2 instances. That got me thinking: why not build a simple calculator that compares Graviton with the rest?

The official AWS calculator? Way too complex for what I had in mind. I wasn't trying to reinvent the wheel - just build a focused tool to back up my recommendation with quick, side-by-side cost comparisons.

Separately, I had written about a ThoughtWorks report that claimed the productivity gains from AI tools were capped at around 15%. A friend pointed out that the report (released in February 2025) predated the release of Claude 3.7 Sonnet, and nudged me to give it a shot.

That resonated. I've always believed "vibe coding" is just another way of saying "code as content." So with that in mind, I figured - why not use Claude and see how quickly I could spin up a lightweight Graviton calculator?

Part 2: Baseline with ChatGPT 4o

I have been using ChatGPT for a long time, so I wanted to build a baseline version with it first. I gave ChatGPT the following sequence of prompts:

Prompts

Recently I wrote about aws graviton how it is cheap etc.

Now as a follow up I want to write a javascript based tool which compares graviton instances with our ec2 instances and shows the saving.

We will need to group instances as small medium large so is apple to apple comparision.

We can keep the data in indexdb. Ui should be as slick.

Strictly client side tool only….

Let's filter on basis of region and large medium small and also support all types and regions

Is it possible for you to demo
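Stripped of the UI and storage requirements, the prompts above ask for three pieces of logic: bucket instances by size, filter by region and bucket, and compute the saving for each like-for-like pair. The following is a minimal sketch of that logic in plain JavaScript, assuming a simple in-memory array of records. It is not the code ChatGPT or Claude generated. The record fields (`instanceType`, `region`, `vcpu`, `memoryGiB`, `hourlyUsd`, `arch`), the vCPU thresholds for small/medium/large, and the sample prices are all hypothetical placeholders, not real AWS rates, and IndexedDB storage is left out.

```javascript
// Minimal sketch, not the generated tool: field names, size thresholds and
// prices are hypothetical placeholders chosen for illustration.

// Assumed rule: bucket instances by vCPU count.
function sizeBucket(vcpu) {
  if (vcpu <= 2) return "small";
  if (vcpu <= 8) return "medium";
  return "large";
}

// Percentage saved when switching from the x86 hourly price to the Graviton one.
function savingsPercent(x86Hourly, gravitonHourly) {
  return ((x86Hourly - gravitonHourly) / x86Hourly) * 100;
}

// Keep records in the chosen region and size bucket, then pair each x86
// instance with the first Graviton (arm64) instance that has the same
// vCPU count and memory, so the comparison is like-for-like.
function compare(records, region, bucket) {
  const inScope = records.filter(
    (r) => r.region === region && sizeBucket(r.vcpu) === bucket
  );
  const graviton = inScope.filter((r) => r.arch === "arm64");
  const x86 = inScope.filter((r) => r.arch === "x86_64");

  const rows = [];
  for (const x of x86) {
    const match = graviton.find(
      (g) => g.vcpu === x.vcpu && g.memoryGiB === x.memoryGiB
    );
    if (match) {
      rows.push({
        x86: x.instanceType,
        graviton: match.instanceType,
        // Rounded to one decimal place for display.
        savingsPct: Number(savingsPercent(x.hourlyUsd, match.hourlyUsd).toFixed(1)),
      });
    }
  }
  return rows;
}

// Hypothetical sample records; the prices are NOT real AWS rates.
const sample = [
  { instanceType: "m6i.large", region: "us-east-1", vcpu: 2, memoryGiB: 8, hourlyUsd: 0.1, arch: "x86_64" },
  { instanceType: "m6g.large", region: "us-east-1", vcpu: 2, memoryGiB: 8, hourlyUsd: 0.08, arch: "arm64" },
  { instanceType: "m6i.xlarge", region: "eu-west-1", vcpu: 4, memoryGiB: 16, hourlyUsd: 0.2, arch: "x86_64" },
];

// One row: m6i.large paired with m6g.large at a 20% saving.
console.log(compare(sample, "us-east-1", "small"));
```

Matching on identical vCPU count and memory is only one way to get the apples-to-apples grouping the prompt asks for. Instance families also differ in generation, networking, and storage, so treat the buckets as a rough filter rather than a strict equivalence.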

Part 3: Using Cline + Claude 3.7 Sonnet

Next, I turned to Claude with the same prompts, aiming for an apples-to-apples comparison. I hit a snag: the Claude website wasn't letting me access the Claude 3.7 Sonnet model at the time (possibly a temporary limit), and I was eager to finish the project.

That's when I switched to VS Code, where I had recently installed Cline. It appeared to be using Claude 3.7 Sonnet under the hood.

Trying Cline was already on my to-do list, so I gave it a shot using the same prompts.

Cline did a lot: it understood my VS Code environment, made multiple API calls to Claude, and within two minutes generated a fully functional JavaScript tool. This is the version I officially released on my website. You can access the tool here.

I was blown away. Compared to what ChatGPT generated, the difference was night and day. I kept wondering - was this Claude's power or Cline's secret sauce (prompt enhancement, env awareness, etc.)?

Part 4: Using Only Claude 3.7 Sonnet (No Cline)

As soon as Claude 3.7 Sonnet access opened up again, I was back at it. I gave it the exact same prompt, this time directly in Claude:

Recently I wrote about aws graviton how it is cheap etc.

Now as a follow up I want to write a javascript based tool which compares graviton instances with our ec2 instances and shows the saving.

We will need to group instances as small medium large so is apple to apple comparision.

We can keep the data in indexdb. Ui should be as slick.

Strictly client side tool only….

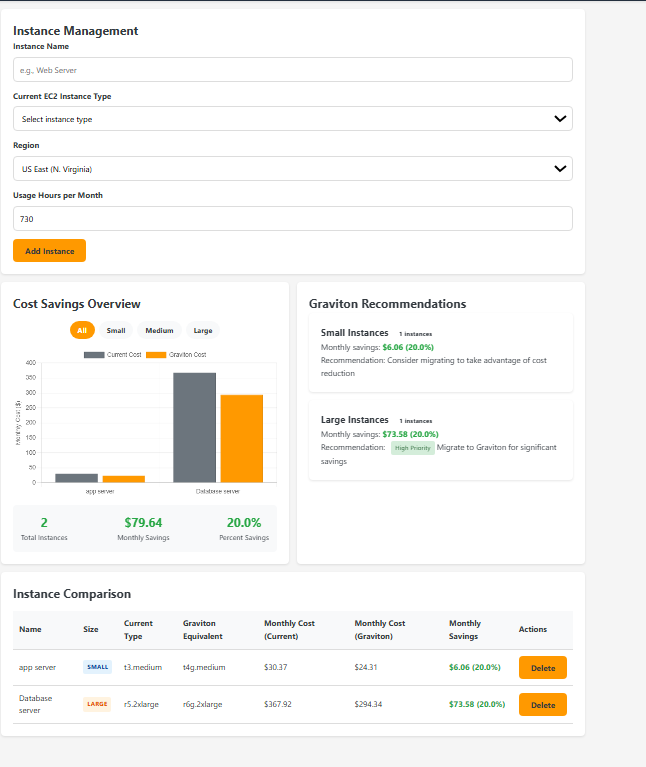

And guess what? It nailed it - again. The final output was clean, complete, and included thoughtful touches: explanations, charts, and even a polished stylesheet.

You can check out the generated tool here. For folks in a rush, here is a snapshot of the tool.

Closing Thoughts

Claude 3.7 Sonnet is impressive. What stood out:

- It added contextual UX details (such as default styles and interactive elements).

- It explained the tool's purpose inline.

- The code quality was solid - no stitching required.

In contrast, I was a bit disappointed by ChatGPT 4o. It never produced a working tool, missed basic UI polish (no CSS), and required more hand-holding.

Cline wasn't supposed to be part of this test; I only used it because the Claude website rate-limited me. But it turned out to be an interesting variable. Initially, I assumed Cline's environment awareness or prompt enhancement was the reason for the superior result. As it turned out, that wasn't the case.

Claude 3.7 Sonnet without Cline produced an equally strong output. The two runs are also a reminder of something we all know but sometimes forget: AI output is non-deterministic. The tools generated by "Cline + Claude" and "Claude alone" didn't match exactly. If I ran the same prompt again, I'd likely get something different, for better or worse. The same applies to ChatGPT: it underperformed this time, but who knows what it would do tomorrow?

This was my first vibe coding experience - and it felt like a great fit for quick, disposable tools like this. A perfect use case for code as content.